The goal of our lab is to define how neurons from different cortical areas interact to realize our perception of shape and motion.

We study the brain of the rhesus macaque, recording action potentials from neurons across the visual hierarchy, including cortical areas V1, V2, V4, inferotemporal cortex, and prefrontal cortex, as well as the thalamus.

We use computational models such as deep neural networks to generate predictions about neural responses. These models help us formulate and test hypotheses about how visual information is represented and transformed across brain areas. We also use them to advance mechanistic interpretability in vivo and in silico.

To achieve this goal, we need animals to perform behavioral tasks, so we use modern techniques (including computer-based automated systems) to train the animals humanely and efficiently. We record from their brains using chronically implanted microelectrode arrays, which yield large amounts of data quickly, and sometimes also with single electrodes for novel exploratory projects (i.e., our moonshot division!). While recording, we can also use activity manipulation techniques (such as cortical cooling, optogenetics, and chemogenetics) to affect cortical inputs to the neurons under study and establish results that are causal, not just correlational.

Solving the problem of visual recognition at the intersection of visual neuroscience and machine learning will yield applications that improve automated visual recognition in fields like medical imaging, security, and self-driving vehicles. Just as importantly, it will illuminate how our inner experience of the visual world comes to be.

An explainer video from the Society for Neuroscience meeting on Mueller, Carter, Kansupada and Ponce (2023):

One explanation of a common technique in the lab:

Some explainer videos from the Society for Neuroscience meeting:

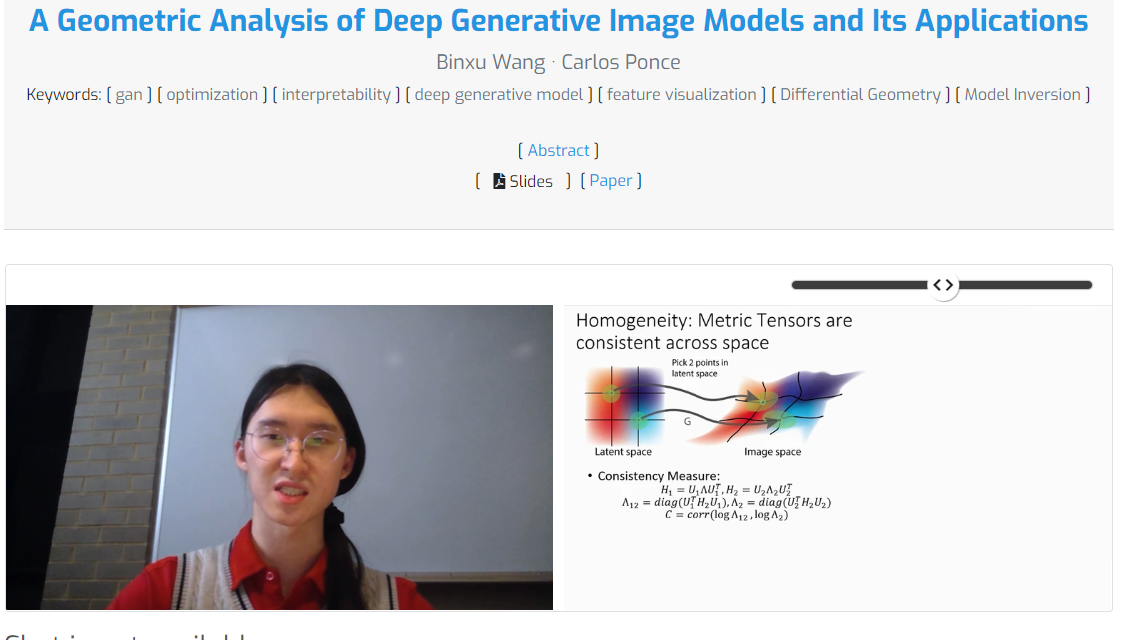

An explainer video on our ICLR 2021 paper, by Binxu Wang:

https://iclr.cc/virtual/2021/poster/3366